In the evolving domain of Infrastructure as Code (IaC), the choice of tooling plays a pivotal role in orchestrating and automating tasks efficiently, as opposed to manually configuring resources. While there are IaC-specific tools available, the versatility of CI/CD tools like Jenkins opens up new paradigms in managing infrastructure.

This blog post delves into the rationale behind considering Jenkins with Terraform for IaC, showcasing its versatility in handling both continuous integration/continuous delivery (CI/CD) and infrastructure code deployments.

At the heart of this tutorial is a practical example: we use Terraform to provision an Azure VM, then use Ansible to set up a monitoring stack of Prometheus and Grafana running on Docker. This hands-on, real-world scenario aims to demystify orchestrating Terraform deployments with Jenkins.

Through this lens, we'll dissect why a CI/CD tool might be preferred over a dedicated IaC tool in certain environments, assessing the pros and cons of choosing Jenkins for IaC management.


TL;DR: You can find the GitHub repo here

What is Jenkins?

Jenkins is an open-source automation server used for continuous integration and continuous deployment (CI/CD). It's a hub for reliable building, testing, and deployment of code, with a plethora of plugins, including the Jenkins Terraform plugin, to extend its functionality.

Jenkins was initially developed as Hudson by Kohsuke Kawaguchi in 2004, out of a need for a continuous integration (CI) tool that could improve software development processes. After Oracle acquired Sun Microsystems in 2010, a dispute over the project led the community to fork Hudson in early 2011 and rename it Jenkins.

Since then, Jenkins has grown into one of the most popular open-source automation servers for reliably building, testing, and deploying code. Its extensibility through a vast plugin ecosystem, together with strong community support, has solidified its place in the DevOps toolchain, bridging the gap between development and operations teams.

Using Jenkins for IaC Management

Why would someone use a CI/CD tool like Jenkins in place of an IaC-specific tool? The idea stems from Jenkins' ability to automate and structure deployment workflows in general, not just continuous integration. Let’s dig deeper.

Why Consider Jenkins for IaC

From an architect's perspective, Jenkins might be considered for IaC management in scenarios where there's already heavy reliance on Jenkins for CI/CD, and the organization has developed competency in Jenkins pipeline scripting.

The decision to use Jenkins for IaC might also be influenced by budget constraints, as Jenkins is an open-source tool, and the cost of specialized IaC platforms can be prohibitive for smaller organizations.

However, as an organization grows in size and complexity, the hidden costs of maintaining and securing Jenkins, along with writing homegrown code to make it scale, can outweigh the initial savings.

Dedicated IaC Tools vs. Jenkins

Dedicated IaC tools such as env0 are built specifically for infrastructure automation. They provide out-of-the-box functionality for state management, secrets management, policy as code, and more, which are essential for IaC but are not native to Jenkins. These tools are designed to operate at scale, with less overhead for setup and maintenance.

Choosing Between the Two

An architect or a team lead must consider the trade-offs. If the organization's priority is to have a unified tool for CI/CD and IaC with a strong in-house Jenkins skillset, Jenkins could be a viable option.

However, for larger organizations, governance, security, cost management, and scalability considerations could make dedicated IaC tools a more valuable long-term option.

In the end, the decision hinges on the organization's current tooling, expertise, and the complexity of the infrastructure they manage.

Here is a table to quickly summarize the pros and cons of this decision.

Jenkins Pros and Cons

Setting Up the Jenkins Job for Managing IaC

Setting up Jenkins for managing Terraform involves a series of steps. In the next few sections, you will learn how to go about:

- Creating a Jenkins server in a Docker container

- Initializing and setting up Jenkins

- Creating Azure credentials for Terraform to access Azure

- Jenkins pipeline script (Jenkinsfile)

- Terraform Configuration

- Ansible Configuration

The goal is to create a VM in Azure that hosts the Prometheus and Grafana monitoring stack. This VM will run Docker and Docker Compose to stand up these services.

Jenkins Server Installation Process

First off, let's take a look at how to install Jenkins in a Docker container.

Create the Jenkins Docker Container

Below is the Dockerfile for the Jenkins container. Notice how we install Terraform and Ansible inside the container. To keep the demo simple, the Jenkins server also acts as the worker node that runs our builds.

Building a scalable Jenkins architecture demands careful consideration and significant technical expertise, which can be a daunting challenge for teams whose primary goal is to implement an IaC solution efficiently. In contrast, a dedicated IaC platform like env0 removes the burden of managing the intricacies of the tool itself. env0 simplifies the process with a guided setup that abstracts the underlying complexities, allowing teams to focus on infrastructure management rather than tool configuration.

In a production Jenkins environment, you should install the Terraform binary along with Ansible and any other necessary binaries on the Jenkins nodes. Notice that we don't make use of the Terraform plugin in our demo.

Build the Docker Image

Now we can build our Jenkins Docker image (with Terraform and Ansible baked in) using the following command:

Next, let's run the Docker container with:

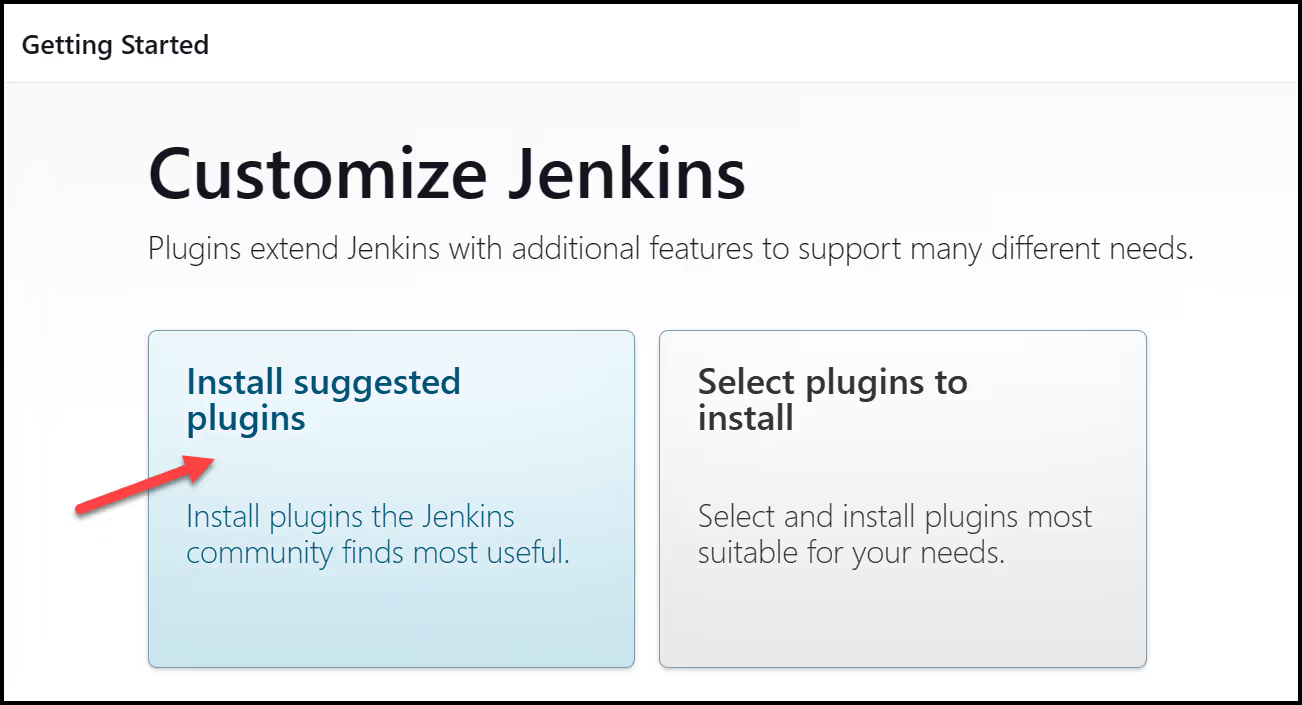

Configure Jenkins

Once the container is running, we can access the Jenkins UI at http://localhost:8080.

Notice that an initial admin password has been written to the container log and to a file inside the container at [.code]/var/jenkins_home/secrets/initialAdminPassword[.code].

You can access this password inside our container by running this command:

Then, install the suggested plugins:

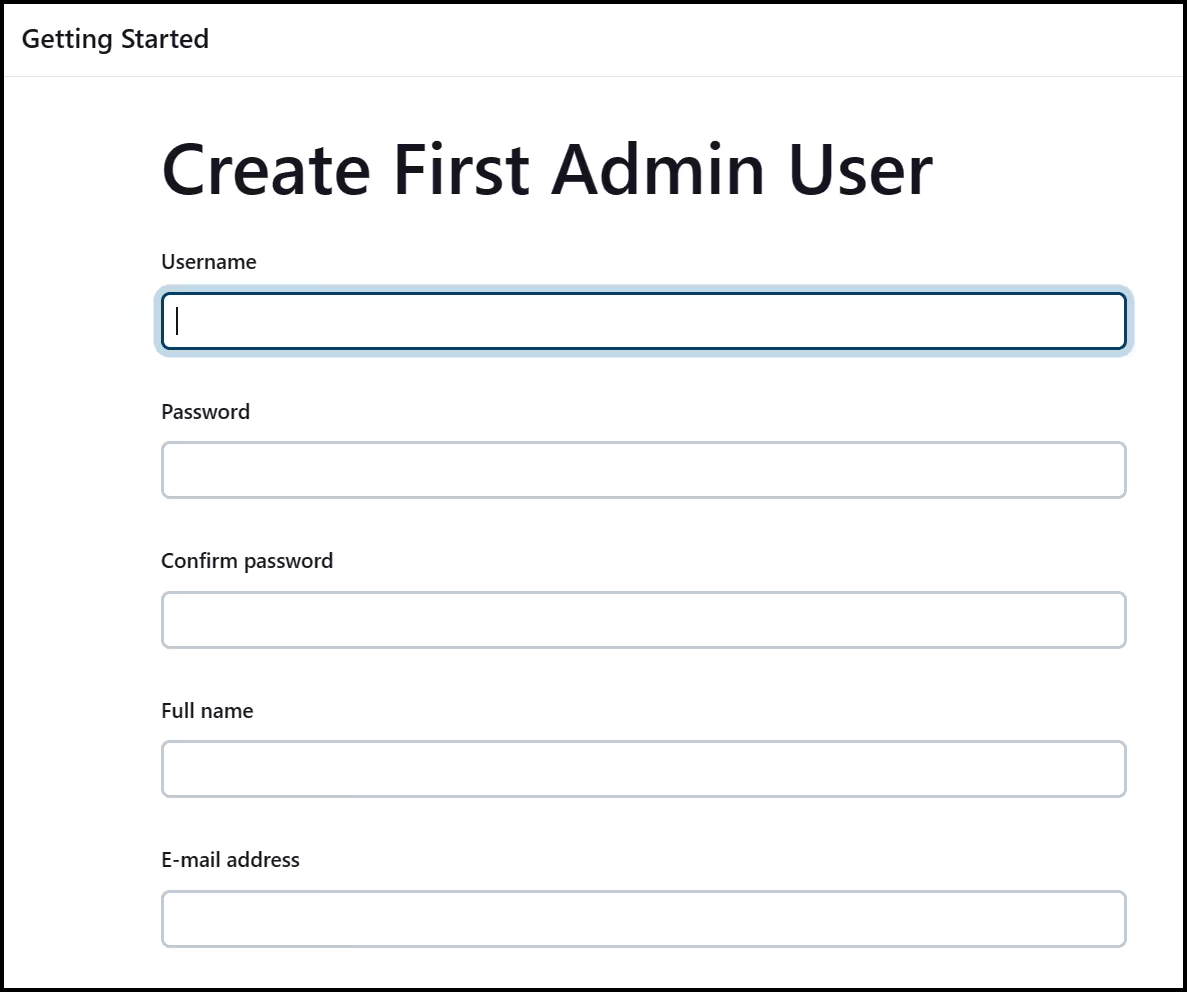

Then go ahead and create the first admin user.

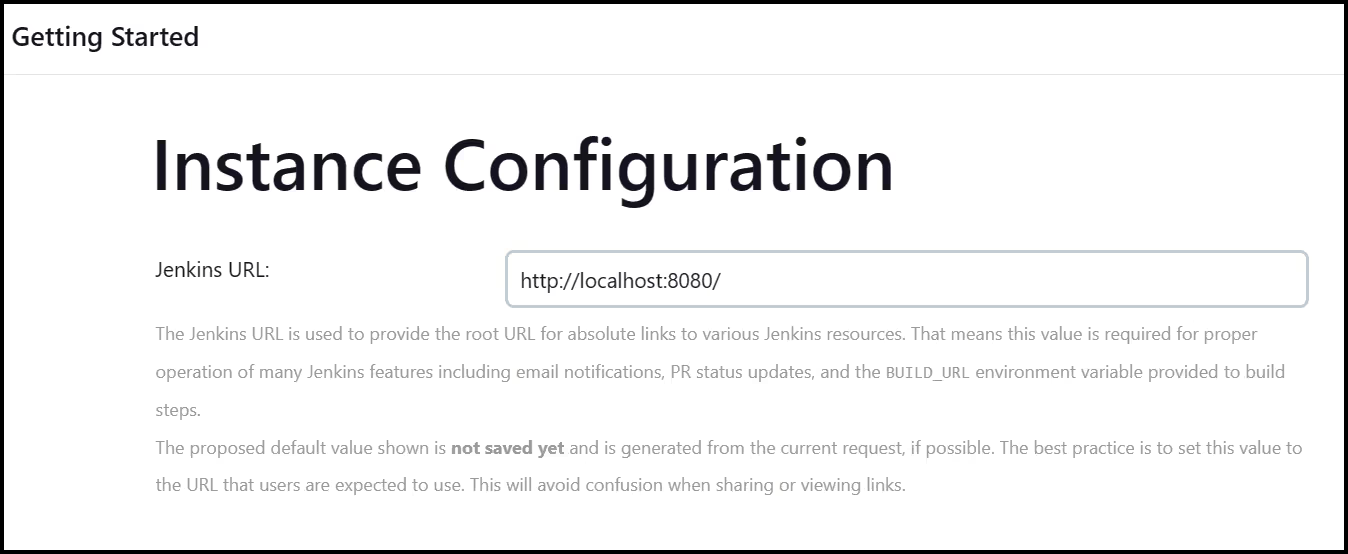

Next, keep the Jenkins URL as is, which should be http://localhost:8080, then save and finish the setup.

Create a Jenkins Job

From the main Jenkins page, click on the New Item button. Then give the pipeline a name and select the Pipeline project type.

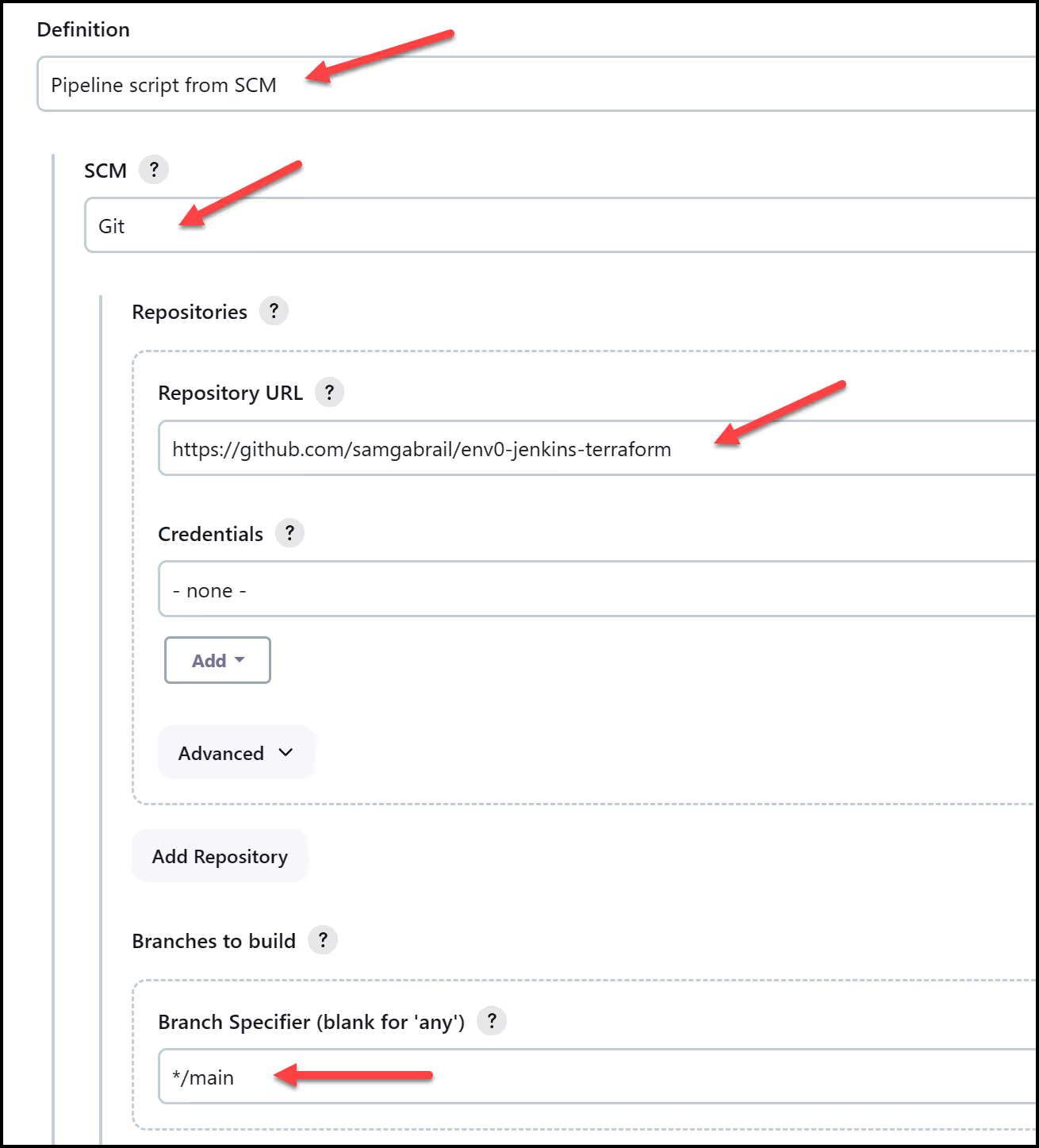

Now, fill out some details for this Pipeline job. You can enable Poll SCM to have Jenkins poll GitHub regularly for changes. The schedule uses cron syntax; for example, to poll every two minutes we would use [.code]H/2 * * * *[.code] (the [.code]H[.code] lets Jenkins pick a hashed offset so that jobs don't all poll at the same moment). Under "Pipeline", choose Pipeline script from SCM, Git for SCM, our Repository URL, and the main branch.

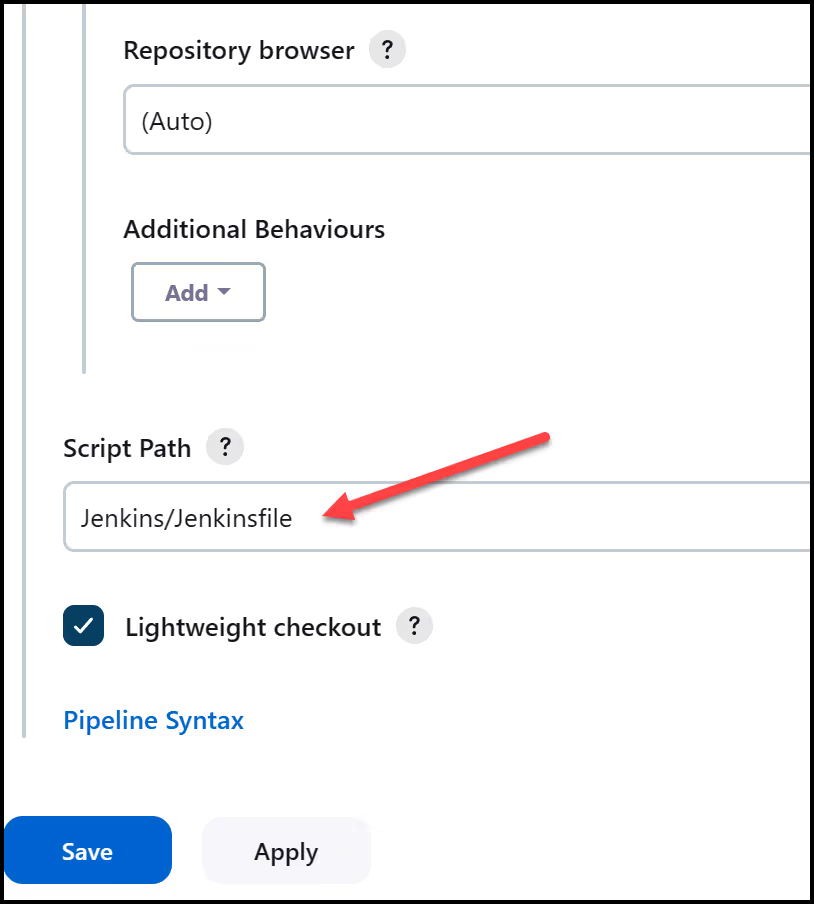

Finally, set the Script Path to [.code]Jenkins/Jenkinsfile[.code] and click Save.

Run the Jenkins Build

Let's go ahead and run our first build. Click the “▷ Build Now” button.

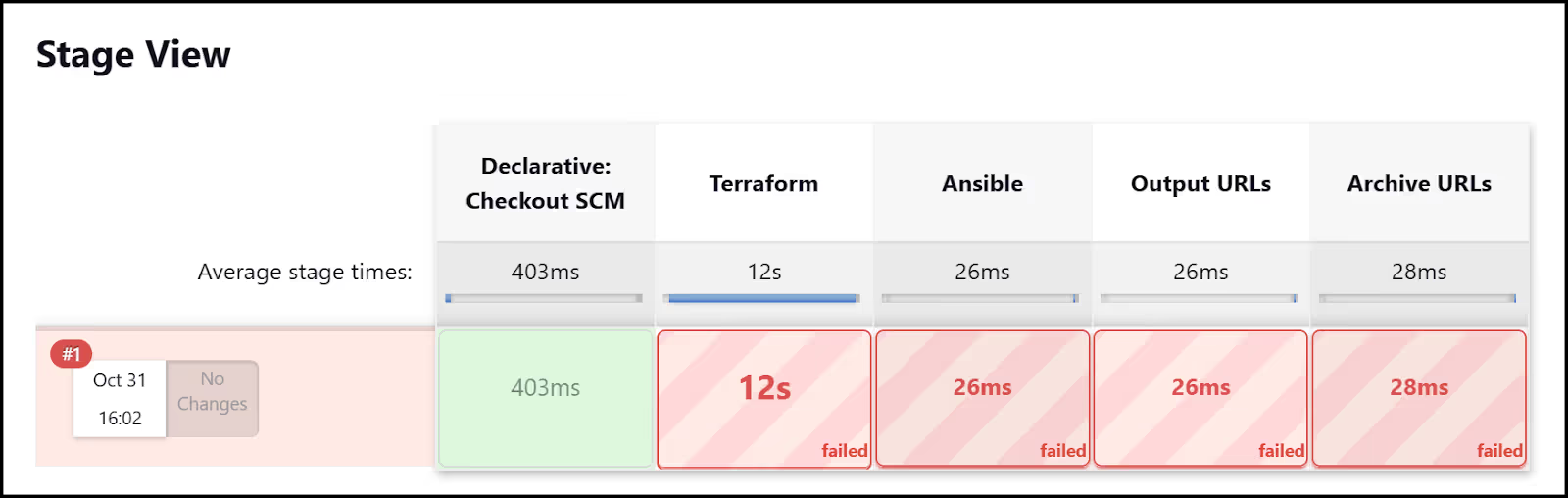

You should get an error in Stage View as shown below:

This is because we still haven't added the necessary credentials for Terraform to access Azure. Let's do that next.

Create Azure Credentials

Follow the guide Azure Provider: Authenticating using a Service Principal with a Client Secret to create an application and service principal. You will need the following for Terraform to access Azure:

- [.code]client_id[.code]

- [.code]client_secret[.code]

- [.code]tenant_id[.code]

- [.code]subscription_id[.code]

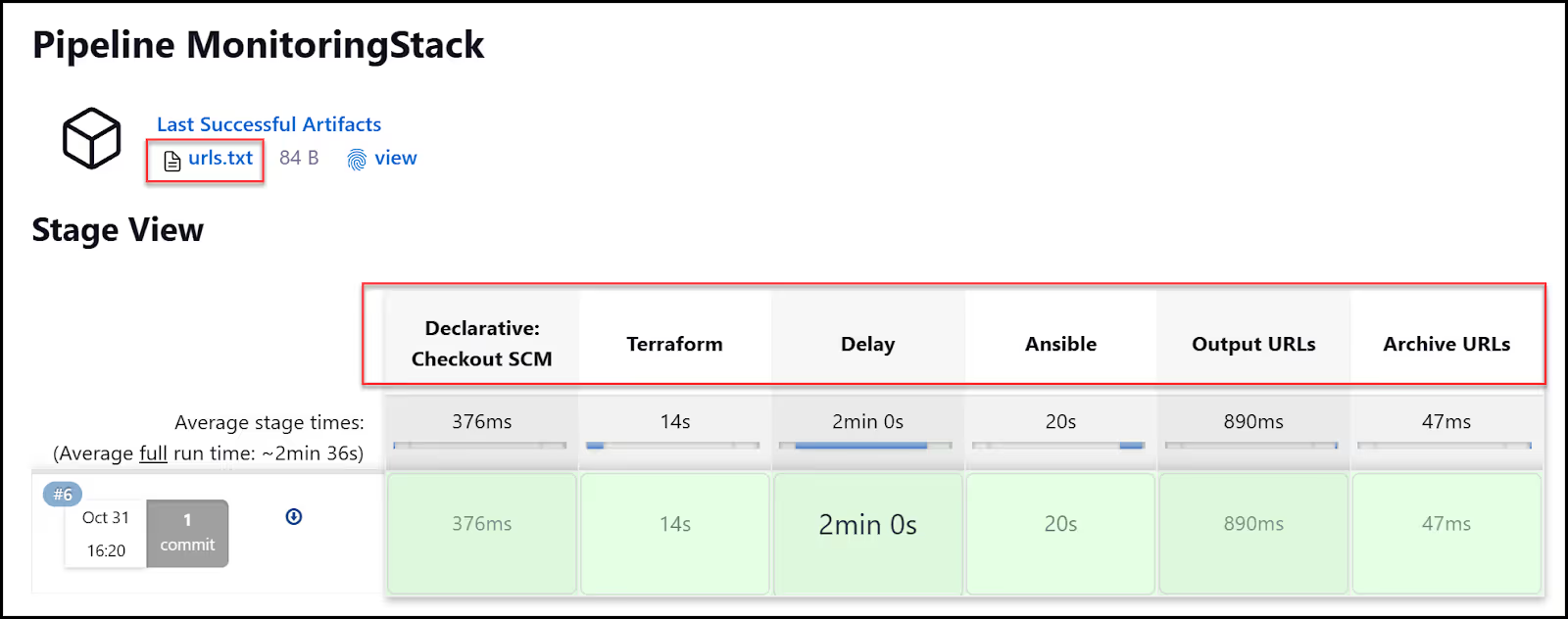

Now, let's add these credentials inside of Jenkins.

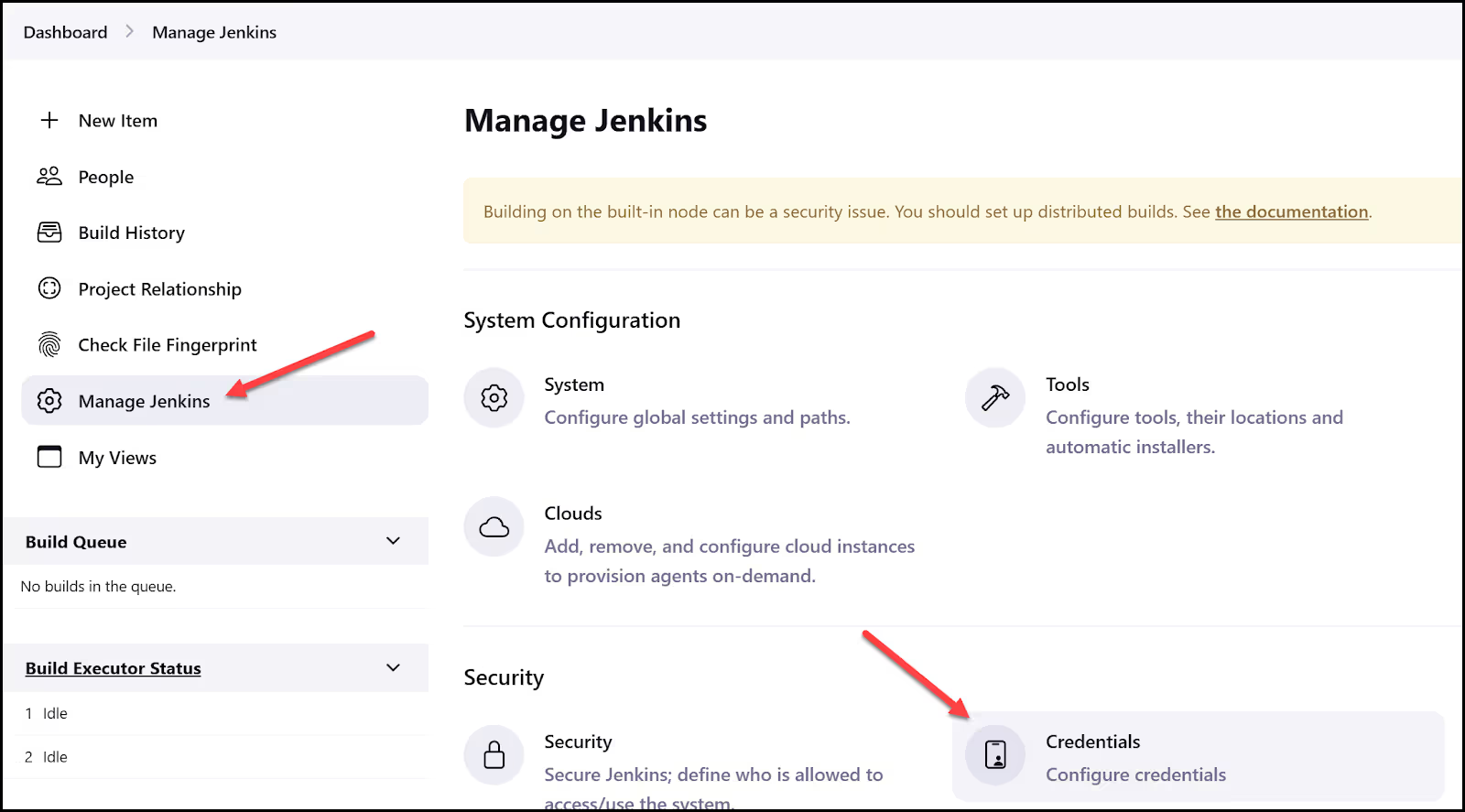

First, go to the "Dashboard > ⚙ Manage Jenkins > Credentials":

Go into the "System" store under the global domain and create five new credentials: four of kind "Secret text" for the Azure values above, and one of kind "SSH Username with private key" that Ansible uses to access our VM over SSH. This is what you should end up with:

Re-Run the Jenkins Pipeline

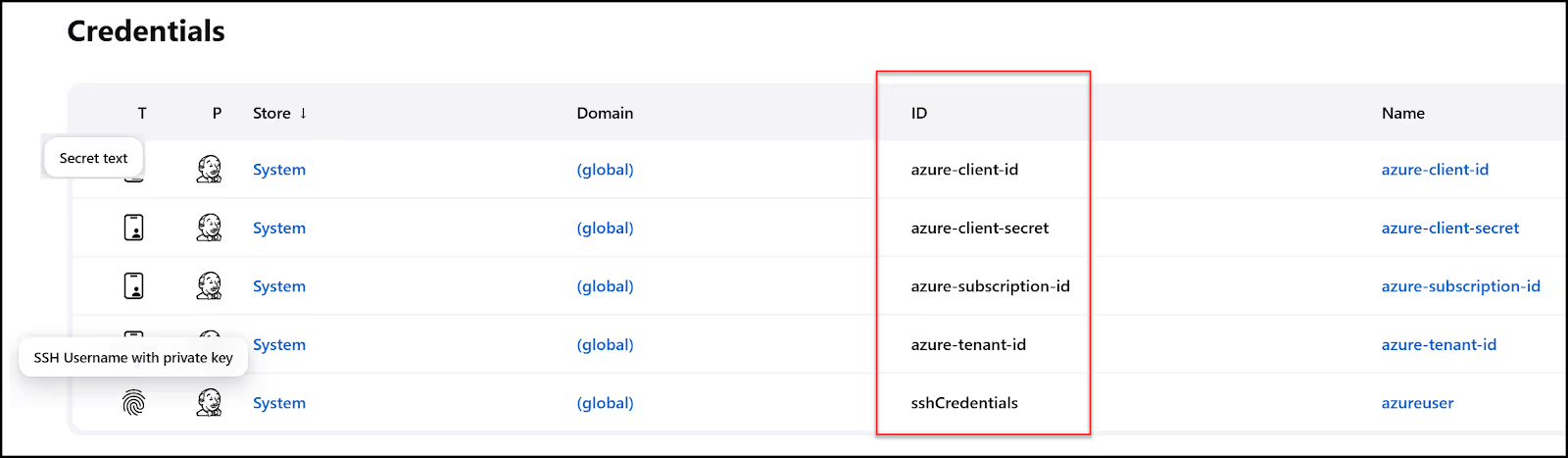

Now, let's go back to our pipeline and re-run it. Notice that this time the button reads ‘Build with Parameters’. Click it, choose "apply" as the action, and start the build. Your build should succeed this time. It will go through the five stages we defined in our Jenkinsfile:

- Terraform (run Terraform to provision the VM in Azure)

- Delay of 2 minutes (wait for the SSH daemon in the VM to come up)

- Ansible (run Ansible to configure the VM with Docker and start the monitoring services)

- Output URLs

- Archive URLs

RBAC Discussion

The Jenkins pipeline I've demonstrated runs in a controlled demo environment. In real-world applications, especially within an organization, robust Role-Based Access Control (RBAC) becomes significantly more important.

Why RBAC Matters

RBAC is central to maintaining security and operational integrity. It determines who has permission to execute, modify, or approve changes in the pipeline, which is critical in preventing unauthorized modifications and ensuring that infrastructure changes are peer-reviewed. This is not just about security; it's about stability and reliability. Without stringent RBAC, you risk having too many cooks in the kitchen, which can lead to configuration drift, security vulnerabilities, and operational chaos.
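To make the idea concrete, here is a minimal, tool-agnostic sketch of an RBAC check in Python. The role names and permission set are hypothetical. They are not the API of Jenkins' Role Strategy Plugin or of env0. The sketch only shows the core rule: each role maps to a set of permissions, and an apply needs an approver who is someone other than the requester, which is the peer-review requirement described above.

```python
from enum import Enum, auto


class Permission(Enum):
    EXECUTE = auto()  # trigger a pipeline run
    MODIFY = auto()   # change the pipeline or the IaC code
    APPROVE = auto()  # sign off on an apply or destroy


# Hypothetical roles for illustration; in practice these come from your
# CI/CD tool's access-control configuration or your SSO groups.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "viewer": frozenset(),
    "operator": frozenset({Permission.EXECUTE}),
    "engineer": frozenset({Permission.EXECUTE, Permission.MODIFY}),
    "lead": frozenset({Permission.EXECUTE, Permission.MODIFY, Permission.APPROVE}),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Unknown roles get no permissions (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


def can_run_apply(requester: str, requester_role: str,
                  approver: str, approver_role: str) -> bool:
    """An apply needs someone who may execute and a *different* person who may approve."""
    return (
        is_allowed(requester_role, Permission.EXECUTE)
        and is_allowed(approver_role, Permission.APPROVE)
        and requester != approver
    )
```

Denying unknown roles by default keeps a typo in a role name from silently granting access.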

Jenkins and RBAC

In Jenkins, implementing RBAC can be somewhat manual and often necessitates additional plugins. For instance, the Jenkins pipeline as configured for the demo does not inherently provide a detailed RBAC system. It can be tailored to do so, but this requires a deep dive into Jenkins' access control mechanisms and perhaps a reliance on the Role Strategy Plugin or similar to ensure that only authorized personnel can execute critical pipeline stages.

env0 and RBAC

On the other hand, env0 offers a far more comprehensive and out-of-the-box RBAC solution. With env0, you can easily define who can trigger deployments, who can approve them, and who can manage the infrastructure. This granular level of control extends across all organizational layers, from team to project to environment, and integrates smoothly with SSO providers for streamlined user and group management.

In an environment where infrastructure as code (IaC) is no longer just a convenience but a necessity, env0’s RBAC system offers a more secure and controlled workflow, ensuring that every change is accounted for and authorized. This mitigates the risk of errors or breaches, which can have significant implications in a production environment.

Final Output

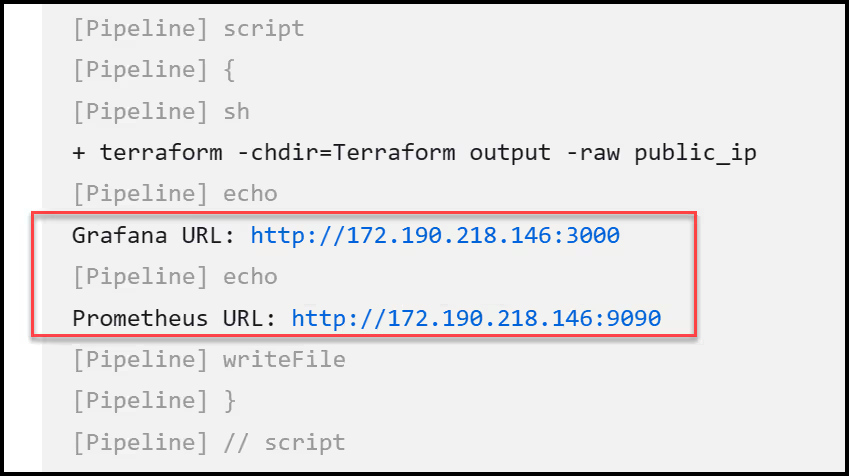

You can find the public URL for Grafana and Prometheus either by going into the Jenkins console logs or by checking the Jenkins artifacts for the urls.txt file. Here is the console output:

Here is the content of the urls.txt file:

Jenkins Pipeline Configuration

Let's dive into the Jenkinsfile – essentially our pipeline script – that orchestrates a monitoring setup using Terraform and Ansible. This is the conductor of our DevOps orchestra, tying everything together.

The Basics

Here, we kick off the Jenkins pipeline on any available Jenkins agent. We also set up a choice parameter for our Terraform action: [.code]apply[.code] to set things up or [.code]destroy[.code] to tear them down.

Environment Variables

We're loading some Azure credentials into environment variables. This is to allow Terraform to access Azure securely. Recall how we stored these credentials in the Jenkins server's secure store.
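The AzureRM provider can read service-principal credentials from the `ARM_CLIENT_ID`, `ARM_CLIENT_SECRET`, `ARM_TENANT_ID` and `ARM_SUBSCRIPTION_ID` environment variables. Below is a small, hypothetical pre-flight check, which is not part of the repo's Jenkinsfile. It assumes the pipeline exports the credentials under those names and confirms they are populated before Terraform runs.

```python
import os
from collections.abc import Mapping
from typing import Optional

# Environment variables the AzureRM provider reads for service-principal
# authentication with a client secret.
REQUIRED_ARM_VARS = (
    "ARM_CLIENT_ID",
    "ARM_CLIENT_SECRET",
    "ARM_TENANT_ID",
    "ARM_SUBSCRIPTION_ID",
)


def missing_arm_vars(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the required ARM_* variable names that are unset or empty."""
    env = os.environ if env is None else env
    # Only names are returned, so secret values never end up in build logs.
    return [name for name in REQUIRED_ARM_VARS if not env.get(name)]
```

A pipeline step could call `missing_arm_vars()` and fail the build with a clear message when the list is non-empty, instead of letting Terraform fail later with an authentication error.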

Terraform Stage

In this stage, we're running our Terraform job which contains the core Terraform commands. We initialize with [.code]terraform init[.code], validate with [.code]terraform validate[.code], and then apply or destroy based on our parameter. If we're applying, we also fetch the public IP for our Ansible inventory. Neat, right?
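The stage's control flow can be sketched as data. The Python below is an illustration, not the repo's Jenkinsfile. It assumes the commands run from the Terraform folder and that the IP output is named `public_ip_address`, which is a hypothetical name, so check main.tf. `-auto-approve` skips Terraform's interactive confirmation, which a non-interactive CI job cannot answer. `terraform output -raw` prints a single string output without quotes.

```python
VALID_ACTIONS = ("apply", "destroy")


def terraform_stage_commands(action: str,
                             ip_output: str = "public_ip_address") -> list[list[str]]:
    """Return the Terraform commands for the chosen pipeline action, in order."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be one of {VALID_ACTIONS}, got {action!r}")
    commands = [
        ["terraform", "init"],
        ["terraform", "validate"],
        # CI runs cannot answer Terraform's interactive "yes" prompt.
        ["terraform", action, "-auto-approve"],
    ]
    if action == "apply":
        # Read the VM's public IP so it can be written into the Ansible inventory.
        commands.append(["terraform", "output", "-raw", ip_output])
    return commands
```

Each inner list could be passed to `subprocess.run(cmd, check=True)` so that a failing command stops the stage, much like a failing `sh` step fails a Jenkins build.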

Delay Stage

I’ve added a delay stage here to give the VM's SSH daemon time to start. Otherwise, Ansible will try to connect before the VM is ready and fail.
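A fixed two-minute sleep is simple but either wastes time or is too short. A common alternative is to poll the VM's SSH port until it accepts connections. Here is a minimal Python sketch of that approach. The function name and defaults are hypothetical, and this is not what the demo pipeline does.

```python
import socket
import time


def wait_for_port(host: str, port: int = 22, timeout: float = 120.0,
                  interval: float = 5.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True as soon as something accepts the connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A completed TCP handshake means a listener (e.g. sshd) is up.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

An open port only proves the SSH daemon is listening. Other setup on the VM may still be running. Ansible's built-in `wait_for_connection` module is another option: it retries until Ansible itself can connect.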

Ansible Stage

If we selected [.code]apply[.code] in our Terraform stage, this stage will run our Ansible playbook to configure the server. We do this securely using SSH credentials that we stored earlier in the Jenkins secure store. This means we allow Ansible to SSH into our Azure VM using the private key stored in Jenkins.

This stage also prints the Grafana and Prometheus URLs to the console log and writes them to a text file so we can store them as an artifact.
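Building those URLs only needs the VM's public IP and the two service ports: Grafana on 3000 and Prometheus on 9090. A minimal Python sketch follows. The "Name: URL" line format is a hypothetical choice and may not match the repo's urls.txt exactly. The IP in the example is from the reserved documentation range.

```python
from pathlib import Path

# Ports published by the Docker Compose stack described in this post.
SERVICE_PORTS = {"Grafana": 3000, "Prometheus": 9090}


def format_urls(public_ip: str) -> str:
    """Build one 'Name: URL' line per monitoring service."""
    lines = [f"{name}: http://{public_ip}:{port}" for name, port in SERVICE_PORTS.items()]
    return "\n".join(lines) + "\n"


def write_urls_file(public_ip: str, path: Path = Path("urls.txt")) -> Path:
    """Write the URLs to a text file that the pipeline can archive as an artifact."""
    path.write_text(format_urls(public_ip))
    return path
```

For example, `format_urls("203.0.113.10")` yields a Grafana line ending in `:3000` and a Prometheus line ending in `:9090`.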

Archive URLs Stage

Finally, we archive the URLs text file as an artifact to reference later.

Terraform Configuration Files

Now that we have Jenkins running, we can configure Terraform. All the Terraform configuration files are found in the Terraform folder in our repo. Below are the contents.

main.tf

The Basics

The main.tf Terraform file has all the Terraform code to create resources on Azure, like a resource group and a Linux VM. It's broken down into different sections: terraform, provider, resource, and output.

The Terraform Block

Here, we specify what providers are required. For this script, we are using the AzureRM provider and locking it down to version 3.77.0.

The Provider Block

This block configures the AzureRM provider. The [.code]features {}[.code] block is required, even though we leave it empty.

The Resource Group

This part is creating an Azure Resource Group called [.code]MonitoringResources[.code] in the [.code]East US[.code] location.

The Linux Virtual Machine

This is the meat of the script!

Here, we're spinning up a Linux VM named "MonitoringVM". The VM resides in the same resource group and location specified earlier. We're using the Standard_B2s size (2 vCPUs and 4 GiB of memory), which strikes a decent balance of CPU and memory for this workload.

Notice the [.code]admin_ssh_key[.code] block? It uses the public key from the file id_rsa.pub. This is super important for secure SSH access which is needed for Ansible.

The Output Block

This output block just spits out the public IP of the VM once it's up. We will need this to update our Ansible inventory file.
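`terraform output -json` prints every output as an object with `sensitive`, `type` and `value` keys. A minimal sketch of turning that into an INI-style Ansible inventory is below. The output name `public_ip_address`, the group name `monitoring` and the `azureuser` login are hypothetical placeholders, not values taken from the repo.

```python
import json


def inventory_from_tf_output(tf_output_json: str,
                             output_name: str = "public_ip_address",
                             group: str = "monitoring",
                             user: str = "azureuser") -> str:
    """Render a one-host INI inventory from `terraform output -json` text."""
    outputs = json.loads(tf_output_json)
    public_ip = outputs[output_name]["value"]
    # ansible_user tells Ansible which account to log in as over SSH.
    return f"[{group}]\n{public_ip} ansible_user={user}\n"
```

Parsing the JSON form avoids scraping Terraform's human-readable output, which is not meant to be machine-parsed.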

networking.tf

Alright, let's dive into the Terraform code in the networking.tf file! This Terraform script is all about laying down the networking groundwork for our Azure setup. It defines how our virtual network, subnet, and security rules come together.

Virtual Network (azurerm_virtual_network)

We're setting up a Virtual Network (VNet) named "MonitoringVNet". This VNet is where our resources like VMs will reside. The [.code]address_space[.code] is set to 10.0.0.0/16, giving us a nice, roomy network to play with.

Subnet (azurerm_subnet)

Within that VNet, we're carving out a subnet. The [.code]address_prefixes[.code] is 10.0.1.0/24, so all our resources within this subnet will have an IP in this range.
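The address math is easy to check with Python's standard `ipaddress` module. Azure reserves five addresses in every subnet: the network address, the default gateway, two for Azure DNS, and the broadcast address. That leaves the /24 with 251 assignable addresses, starting at .4. The helper names below are illustrative.

```python
import ipaddress

vnet = ipaddress.ip_network("10.0.0.0/16")
subnet = ipaddress.ip_network("10.0.1.0/24")

AZURE_RESERVED_PER_SUBNET = 5  # .0, .1, .2, .3 and the broadcast address


def azure_usable_hosts(net: ipaddress.IPv4Network) -> int:
    """Addresses Azure can hand out to NICs in a subnet."""
    return net.num_addresses - AZURE_RESERVED_PER_SUBNET


def first_assignable(net: ipaddress.IPv4Network) -> ipaddress.IPv4Address:
    """Azure assigns from the fifth address (x.x.x.4) onward."""
    return net.network_address + 4


print(vnet.num_addresses)          # 65536
print(subnet.subnet_of(vnet))      # True
print(azure_usable_hosts(subnet))  # 251
print(first_assignable(subnet))    # 10.0.1.4
```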

Network Interface (azurerm_network_interface)

Here, we're creating a network interface card (NIC) named "MonitoringNIC". This NIC is what connects our VM to the subnet. We're dynamically assigning a private IP address here.

Public IP (azurerm_public_ip)

We're also setting up a public IP address with dynamic allocation. We'll use this to access our monitoring VM.

Network Security Group (azurerm_network_security_group)

Let's not forget about security! We set up a Network Security Group (NSG) and defined rules for inbound traffic. We've set up rules for Grafana, Prometheus, and SSH, specifying which ports should be open.
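Azure evaluates NSG rules in priority order, lowest number first, and stops at the first match. Inbound traffic that matches none of your rules falls through to the default DenyAllInBound rule. The Python model below sketches that logic for destination ports only. The priorities are hypothetical. The model ignores source addresses, protocols and the other default rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NsgRule:
    name: str
    priority: int  # 100-4096; lower numbers are evaluated first
    port: int      # destination port
    access: str    # "Allow" or "Deny"


# Hypothetical priorities; the ports match the services in this post.
INBOUND_RULES = [
    NsgRule("SSH", 1001, 22, "Allow"),
    NsgRule("Grafana", 1002, 3000, "Allow"),
    NsgRule("Prometheus", 1003, 9090, "Allow"),
]


def inbound_access(port: int, rules: list[NsgRule] = INBOUND_RULES) -> str:
    """Return the decision of the first matching rule, by priority."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port == port:
            return rule.access
    return "Deny"  # stands in for the default DenyAllInBound rule
```

Because the first match wins, a Deny rule with a lower priority number overrides a later Allow rule for the same port. That is useful for temporarily closing SSH without deleting the Allow rule.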

NSG Association

Finally, we're associating the NSG with our subnet. This means the rules we defined in the NSG will apply to all resources in this subnet.

id_rsa.pub

This file is used for SSH access. It's the public half of the key pair whose private key Ansible uses to SSH into the VM for configuration management. Terraform adds this public key to the VM's admin user through the [.code]admin_ssh_key[.code] block.

Terraform State

It's important to note that the Terraform state file (terraform.tfstate) is stored in the job's workspace folder on the Jenkins worker node that runs the build.

This is not desirable in a production environment, though. There, you'd store the state file in a secure remote location that only your team can access, such as a private S3 bucket or Azure Blob Storage container with encryption enabled. Alternatively, you could use an IaC platform such as env0 that stores and manages state files securely for you.
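Terraform's `azurerm` backend supports partial configuration. You declare an empty `backend "azurerm" {}` block inside the `terraform` block and pass the settings at init time with `-backend-config`. Here is a hedged Python sketch of building that command. The resource group, storage account, container and state key are placeholders. The demo repo does not include a backend block, so adding one is an assumption.

```python
def remote_state_init_command(resource_group: str,
                              storage_account: str,
                              container: str,
                              key: str = "monitoring.tfstate") -> list[str]:
    """Build `terraform init` with partial azurerm backend settings."""
    settings = {
        "resource_group_name": resource_group,
        "storage_account_name": storage_account,
        "container_name": container,
        "key": key,  # blob name for this configuration's state file
    }
    return ["terraform", "init"] + [f"-backend-config={k}={v}" for k, v in settings.items()]
```

Keeping these values out of main.tf lets the same code point at different state storage per environment. The storage account itself still needs access restricted to your team.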

Ansible Configuration Files

How about we take a look at the Ansible configuration files? They are located inside the Ansible directory and the contents are:

ansible.cfg

The file contains configuration settings that influence Ansible's behavior. These settings are grouped into sections, and the [defaults] section is what we're focusing on here.

By setting [.code]host_key_checking = False[.code], we're telling Ansible not to check the SSH host key when connecting to remote machines. Normally, SSH checks the host key to enhance security, but this can get annoying in environments where host keys are expected to change, or where we aren't super concerned about man-in-the-middle attacks.
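ansible.cfg is an INI-style file, so Python's `configparser` can read it. The sketch below embeds only the setting discussed here and is not a copy of the repo's file. Ansible's default for `host_key_checking` is True when the option is absent. The `ANSIBLE_HOST_KEY_CHECKING` environment variable can override the file.

```python
import configparser

# The setting discussed above, as it would appear in ansible.cfg.
ANSIBLE_CFG = """\
[defaults]
host_key_checking = False
"""


def host_key_checking_enabled(cfg_text: str) -> bool:
    """Read host_key_checking from [defaults]; Ansible's default is True."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    # fallback covers both a missing [defaults] section and a missing option.
    return parser.getboolean("defaults", "host_key_checking", fallback=True)
```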

Remember, though, disabling this check can make our setup less secure. It's like saying, "Yeah, I trust you," without asking for an ID. We are fine with this here in the context of our demo.

appPlaybook.yaml

Let's get down to breaking apart our appPlaybook.yaml Ansible playbook. It's designed to set up a monitoring stack with Prometheus and Grafana on our Azure VM.

The Overview

We're targeting all hosts ([.code]hosts: all[.code]). We're also elevating our permissions to root with [.code]become: true[.code] and [.code]become_user: root[.code]. Now, let's jump into the tasks.

Installing pip3 and unzip

We're kicking things off by installing pip3 and unzip, updating the apt cache along the way.

Adding Docker GPG apt Key

Before installing Docker, we're adding its GPG key for package verification. Standard security practices.

Adding Docker Repository

Next, we're adding Docker's apt repository to our sources list. This allows us to install Docker directly from its official source.

Installing docker-ce

Time to install Docker! We're installing the latest version of docker-ce.

Installing Docker Python module

We're also installing the Docker Python module so we can interface with Docker using Python scripts.

Creating and Setting up Docker Compose

We're creating a directory for our Docker Compose file and then copying the Compose config into it. The config sets up Prometheus on port 9090 and Grafana on port 3000.

Running Docker Compose

Finally, the grand finale! We're running [.code]docker compose up -d[.code] in the directory where our Docker Compose file resides, bringing up our monitoring stack.

Conclusion

In this exploration of using Jenkins to manage Terraform, we've seen the flexibility of Jenkins, a popular choice for CI/CD automation. Through a Jenkins pipeline, we orchestrated the provisioning of an Azure VM and its configuration to host our monitoring stack.

As seen in our hands-on example, it adapts well to managing IaC with Terraform and Ansible but does fall short in some key ways.

Using Jenkins to manage Terraform or your IaC is tempting given the pros covered in this article; however, it's essential to note that Jenkins isn’t purpose-built for IaC management.

Adopting a purpose-built IaC platform such as env0 gives you the flexibility you get with Jenkins plus a feature-rich IaC platform out of the box, making IaC management a breeze.

Check out my article, env0 - A Terraform Cloud Alternative, which uses Terraform together with Ansible on env0 to show how env0 can run a workflow similar to this demo, with added features.

Explore the vast array of features env0 offers for IaC management. Below is a small sample:

- Automated deployment workflows: env0 automates your Terraform, Terragrunt, AWS CloudFormation, and other Infrastructure as Code tools.

- Governance and policy enforcement: Enforce Infrastructure as Code best practices and governance with approval workflows, full and granular RBAC, and multi-layer Infrastructure as Code variable management.

- Multi-cloud infrastructure support: env0 supports multi-cloud infrastructure, enabling you to manage cloud deployments and IaC alongside existing application development pipelines.

- Cost optimization: env0 provides cost management features that help you optimize your cloud deployments.

- Team collaboration: env0 enhances team collaboration by providing end-to-end IaC visibility, audit logs, and exportable IaC run logs to your logging platform of choice.

Note: Terraform releases from version 1.6 onward are licensed under the Business Source License (BUSL), while versions up to and including 1.5.x remain open source. OpenTofu is an open-source fork of Terraform that builds upon its existing principles and offerings. Originating from Terraform version 1.5.6, it stands as a robust alternative to HashiCorp's Terraform.

To learn more about Terraform, check out this Terraform tutorial.
