Deploying a Kubernetes cluster is a complex task. Even when we can use a managed cluster, we still need to deal with the different requirements and flavors of each cloud provider.

To streamline cluster deployment, a proficient engineer will take the modern approach: infrastructure as code (IaC) tools. IaC tools are the easiest way to define a well-structured setup that we can reuse whenever needed, so we can be confident we’ll get the same cluster setup every time.

So this all sounds wonderful, right? Well, almost. Setting up a cluster usually involves more than one IaC tool: we have tools for deploying a cluster (Terraform, Pulumi, CloudFormation) and others for configuring it (Kubernetes manifests). If we want to streamline the entire process, we have to run it in distinct steps, each using a different tool.

env0 supports many IaC tools, and it also lets you define a single workflow that unifies and streamlines Kubernetes cluster deployments. Let’s walk through a common example: we’ll use Terraform to deploy an AWS EKS cluster, and then use kubectl to install and configure Prometheus monitoring on it.

Let’s start by defining the moving parts of our workflow. For this, we need to define four env0 templates:

- A Terraform template for the AWS EKS cluster and its prerequisites

- A Kubernetes template for the Prometheus operator prerequisites (following the installation guide in the repository)

- A Kubernetes template for the actual Prometheus operator installation

- A workflow template that ties the other three together

Terraform template - EKS cluster

- We first need to create the EKS cluster using Terraform. In the env0 UI, we’ll create a new template and select Terraform as its type.

- After that, we’ll integrate the env0/k8s-modules GitHub repository with env0. This repository includes some Terraform module presets that will assist us in deploying the EKS cluster with ease.

- We’ll hit next once, skipping the Variables step, which lets us require or predefine environment variables for every environment created from this template (we’ll get to setting the required variables soon).

- We’ll hit next once again and assign the template to our relevant env0 project! Yay, we’re done! How easy was that?!

Kubernetes templates - Prometheus operator and its prerequisites

- First, we must attach an `env0.yml` file that lets us run the authentication commands required to connect to our EKS cluster before any kubectl commands run against it. Since we want to use the prometheus-operator/kube-prometheus repository, we must fork it and push an `env0.yml` file to its root folder. Here’s what the file looks like:

```yaml
version: 1

deploy:
  steps:
    setupVariables:
      after: &login-k8s
        - aws eks update-kubeconfig --name $CLUSTER_NAME

destroy:
  steps:
    setupVariables:
      after: *login-k8s
```

This makes env0 run the `aws eks update-kubeconfig` command after the step that sets up the required variables, and right before it runs the `kubectl diff` and `kubectl apply`/`kubectl delete` commands. This happens both when an environment is deployed and when it is destroyed; the `&login-k8s` anchor and the `*login-k8s` alias let both phases share the same command list. I’ve also used the `$CLUSTER_NAME` placeholder, which env0 will populate later from an environment variable.
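To make the hook ordering concrete, here is a minimal Python sketch of the command sequence described above. It models `env0.yml` as the dictionary a YAML parser would produce and assumes, following the description, that env0 runs `kubectl diff` and then `kubectl apply` (deploy) or `kubectl delete` (destroy) against the template’s folder. The `plan_commands` helper is hypothetical and is not env0’s actual implementation.

```python
from string import Template

# The login hook from env0.yml. In YAML, &login-k8s defines an anchor and
# *login-k8s reuses it, so deploy and destroy share this same command list.
LOGIN_K8S = ["aws eks update-kubeconfig --name $CLUSTER_NAME"]

# env0.yml modeled as the dictionary a YAML parser would produce.
ENV0_YML = {
    "version": 1,
    "deploy": {"steps": {"setupVariables": {"after": LOGIN_K8S}}},
    "destroy": {"steps": {"setupVariables": {"after": LOGIN_K8S}}},
}


def plan_commands(action: str, folder: str, env: dict[str, str]) -> list[str]:
    """Return an illustrative command order for one deploy or destroy run.

    Hypothetical helper: the kubectl commands are assumptions based on the
    description above, used only to show where the 'after' hook runs.
    """
    if action not in ("deploy", "destroy"):
        raise ValueError(f"unknown action: {action}")
    hooks = ENV0_YML[action]["steps"]["setupVariables"]["after"]
    # Expand $CLUSTER_NAME from the environment variables supplied to env0.
    commands = [Template(cmd).substitute(env) for cmd in hooks]
    commands.append(f"kubectl diff -f {folder}")
    verb = "apply" if action == "deploy" else "delete"
    commands.append(f"kubectl {verb} -f {folder}")
    return commands


if __name__ == "__main__":
    for command in plan_commands("deploy", "manifests/setup", {"CLUSTER_NAME": "demo-eks"}):
        print(command)
```

Running it prints the login command with the cluster name filled in, followed by `kubectl diff -f manifests/setup` and `kubectl apply -f manifests/setup`. The point is the position of the hook: authentication always happens before any kubectl command touches the cluster.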

- Now we need to install the Prometheus operator using the kubectl CLI. For that, we’ll create two separate Kubernetes templates, matching the required steps of the operator’s installation guide:

a. We’ll start with the prerequisites template. First, we’ll choose the Kubernetes template type:

b. Then we’ll set the template’s VCS details to use the env0/kube-prometheus GitHub repository we just forked, and set the Kubernetes folder to `manifests/setup`, which contains CRDs that the Prometheus operator requires.

As with the previous template, we’ll skip the Variables step and assign the template to our env0 project.

- Now we’ll need to repeat the same steps for the Prometheus operator template, based on the installation instructions of the repository.

We’ll configure another Kubernetes template called Prometheus Operator - Installation with the same settings, except for the Kubernetes folder, which will now point to the `manifests` folder instead of `manifests/setup`.

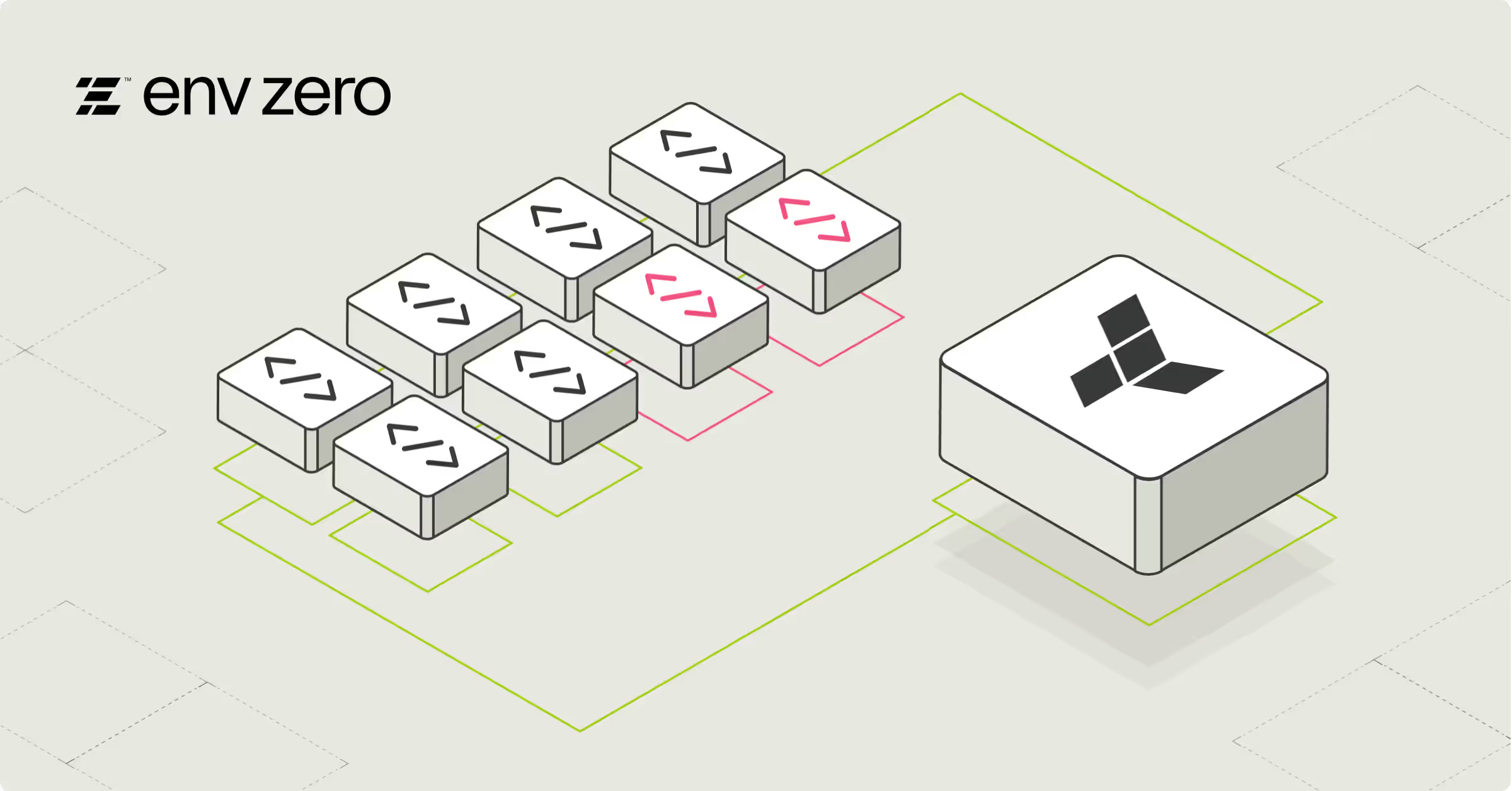

After we’ve created all the templates required for the EKS cluster and the Prometheus Operator installation steps, we can define the workflow that establishes the dependencies between them.

Workflow template

As described, we also need a template that combines all three templates into a single workflow. For that, we need to do two things:

- Push an `env0.workflow.yml` file to a new GitHub repository, defining the required workflow:

```yaml
environments:
  eks:
    name: eks
    templateName: 'EKS Cluster'
  prometheus-prereq:
    name: prometheus-prereq
    templateName: 'Prometheus Operator - Prerequisites'
    needs:
      - eks
  prometheus-install:
    name: prometheus-install
    templateName: 'Prometheus Operator - Installation'
    needs:
      - prometheus-prereq
```

This YAML file describes the dependencies between the environments: the Prometheus prerequisites environment needs the EKS cluster, and the Prometheus operator installation needs the prerequisites. As a result, the EKS cluster template is deployed first, followed by the Prometheus prerequisites template, and then the Prometheus operator installation template.
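The `needs` keys form a small dependency graph, and the deployment order follows from a topological sort of it. The Python sketch below uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) to derive that order from the workflow definition; the `deployment_order` helper is hypothetical and only illustrates the idea, not how env0 schedules environments internally.

```python
from graphlib import TopologicalSorter

# The environments from env0.workflow.yml, reduced to their 'needs' lists.
WORKFLOW = {
    "eks": [],
    "prometheus-prereq": ["eks"],
    "prometheus-install": ["prometheus-prereq"],
}


def deployment_order(workflow: dict[str, list[str]]) -> list[str]:
    """Return an order in which every environment comes after its 'needs'."""
    # TopologicalSorter takes a mapping of node -> predecessors.
    return list(TopologicalSorter(workflow).static_order())


if __name__ == "__main__":
    print(deployment_order(WORKFLOW))
```

For this chain of three environments there is only one valid order: `eks`, then `prometheus-prereq`, then `prometheus-install`. A cycle in `needs` would make `static_order()` raise `graphlib.CycleError`, which is a useful sanity check as a workflow grows.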

- Add a workflow template that points to this yaml file:

- After hitting next, we’ll get to the Variables step. This step lets us define required environment variables, forcing everyone who uses this template to supply them before deploying. As you probably guessed, I’m talking about AWS credentials: they are needed both for setting up the EKS cluster and for the `aws eks` CLI authentication command. We also need to supply a `CLUSTER_NAME` variable, which gives the EKS cluster a unique name and is used by the `env0.yml` file we created for Kubernetes authentication.

Deploying it all

After creating all the required templates, I’ll go ahead and deploy the workflow environment. Here’s how the deployment graph looks:

Right after deployment is done, I can click each of the environments to see their status and deployment logs. Here’s how the Prometheus Operator Prerequisites environment looks:

Cluster Visibility

After deploying your infrastructure and k8s resources, you need a way to understand the health of the cluster and its resources, because troubleshooting issues in k8s can be challenging without the right tool. We recommend using a specialized k8s troubleshooting and automation agent (e.g. Komodor) that can quickly identify and resolve issues and take actions from the platform, reducing context switching. Such an agent should monitor the cluster, detect anomalies and performance degradation, and show you a timeline of cluster changes. Better awareness means easier troubleshooting and less unplanned downtime.

Summary

Using templates and a workflow, we deployed an EKS cluster and installed our required basic setup on it. In this quick example, the setup only included a Prometheus Operator, but we could easily add any other Kubernetes project by configuring it as a template in env0. These templates could be deployed alongside the Prometheus operator or after it, allowing us to structure a clear dependency tree of different stacks using different IaC tools (env0 also supports Pulumi, Terragrunt and CloudFormation!)

Lastly, you might notice from the steps here that we’ve scripted the Kubernetes cluster authentication in the `env0.yml` file. Ordinarily, you’d expect authentication to be handled in the UI. For this initial release of Kubernetes support, we opted for the fastest way to get the bulk of the functionality out for you to use. We plan to integrate authentication the same way we do for all our other supported platforms. So, we’d like your feedback! If you’re testing Kubernetes support in env0 and would like to see authentication rolled into the UI, please drop us a line. You can simply ping us on Twitter if that’s easiest, or email us at hello@env0.com.
